AI Governance Maturity Model Medium

A practitioner-led perspective on AI governance maturity models, of the kind often published on Medium, focuses on how organizations actually govern AI in real operating environments, not just how they describe intentions. It looks at whether decision ownership is clear, risks are reviewed consistently, and controls work in practice. Rather than abstract ethics, the model measures how governance evolves as AI use expands. This makes it useful for teams trying to understand whether their current approach can support broader, higher-impact AI deployment without increasing unmanaged risk.

Interest in AI governance maturity models has increased as organizations move beyond pilots into everyday AI use. Informal approvals and ad hoc controls often break down once AI systems influence customers, employees, or financial outcomes. A maturity model helps teams assess where governance is strong, where it is fragile, and what practical improvement looks like. It creates a shared reference point for leadership, compliance, and delivery teams to discuss progress without relying on assumptions.

Much of the practical framing around AI governance maturity models comes from practitioner-driven explanations, such as articles on Medium, rather than formal standards alone. These discussions emphasize observable behaviors, such as how risks are escalated or how accountability is enforced. They show governance as a gradual progression, not a checklist. This framing resonates with organizations that need governance to support responsible scale, rather than slow innovation through overly rigid controls.

What Is an AI Governance Maturity Model?

An AI governance maturity model is a structured way to measure how well an organization governs its AI systems.

It shows how governance practices progress from informal and reactive to structured, managed, and optimized.

How AI governance maturity models are defined

AI governance maturity models define clear stages of governance capability.

They assess how policies, oversight, and controls evolve as AI use grows.

- Stage-based frameworks tied to real operating practices

- Focus on governance, not AI performance

- Used for assessment, planning, and prioritization

What problems these models are designed to solve

These models address gaps created by fast AI adoption without proper oversight.

They help organizations understand where governance is weak or inconsistent.

- Unclear ownership of AI decisions

- Missing risk controls or approvals

- Inconsistent policies across teams

How maturity models differ from AI ethics principles

Maturity models focus on execution, not values alone.

Ethics principles explain what should matter; maturity models show how it is enforced.

- Ethics = intent and values

- Maturity = processes and accountability

- Governance = repeatable, auditable actions

Why “Medium” Content Shapes How AI Governance Maturity Is Explained

Thought leadership platforms influence how governance concepts are understood in practice.

Writers often translate complex governance ideas into operational language.

How thought leadership platforms influence governance frameworks

Platforms like Medium shape early understanding of governance models.

They focus on clarity and real-world application rather than formal compliance language.

- Practitioner-led explanations

- Emphasis on progression, not perfection

- Accessible framing for decision-makers

Common themes found in AI governance maturity discussions on Medium

Medium articles often highlight practical maturity signals.

They focus on how governance works in day-to-day operations.

- Ownership clarity

- Decision escalation paths

- Risk reviews tied to business impact

Differences between Medium explanations and formal standards

Medium content simplifies, while standards formalize.

Both are useful, but they serve different needs.

- Medium = conceptual understanding

- Standards = regulatory alignment

- Models bridge both when applied carefully

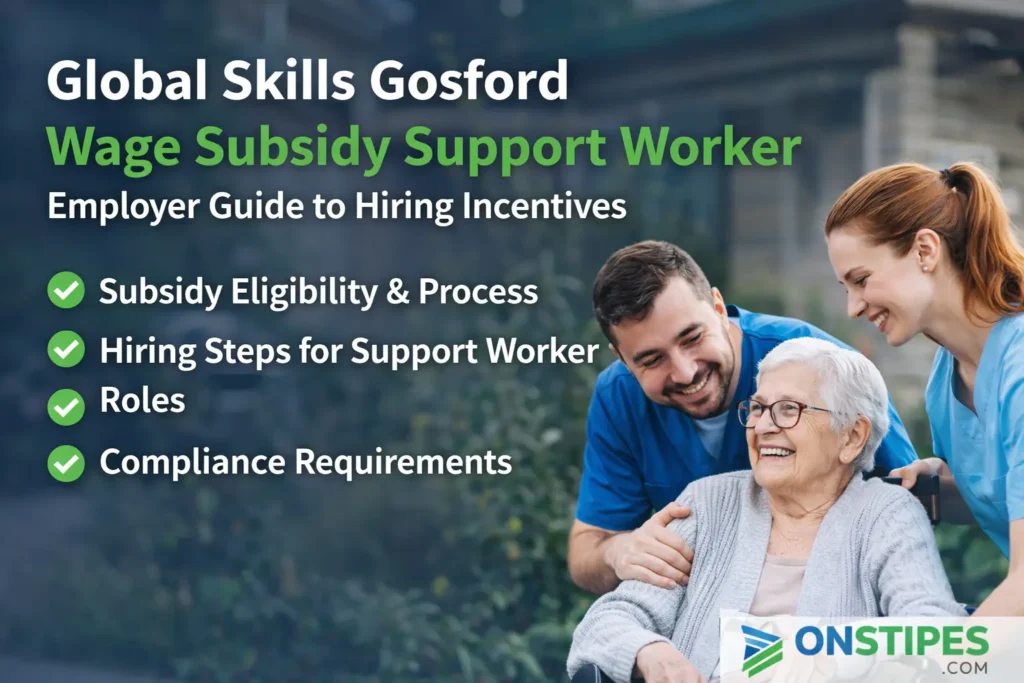

How an AI Governance Maturity Model Works in Practice

The model works by assessing current practices and mapping them to defined maturity stages.

It creates a shared view of governance strength and gaps.

Assessing current AI governance capability

Assessment starts by reviewing existing controls and behaviors.

This is a factual review, not a scoring exercise.

- Policies in use, not just written

- Who approves AI use cases

- How risks are identified and tracked

Mapping organizational practices to maturity stages

Practices are compared against stage definitions.

The goal is alignment, not label-chasing.

- Ad hoc decisions map to early stages

- Standard reviews indicate structured stages

- Integrated controls signal advanced maturity
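The mapping above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the practice labels (`standard_reviews`, `integrated_controls`) and the rule that a higher stage counts only when the lower-stage practice is also present are hypothetical choices, not part of any formal standard.

```python
from __future__ import annotations

from enum import IntEnum


class Stage(IntEnum):
    """Three stages, named after the stages described in this article."""
    AD_HOC = 1
    DEFINED = 2
    MANAGED = 3


def map_to_stage(observed: set[str]) -> Stage:
    """Map observed practices to a maturity stage.

    Assumption: a stage is reached only if the practices of the
    earlier stages are also observed, so integrated controls without
    standard reviews still count as ad hoc.
    """
    if "standard_reviews" not in observed:
        return Stage.AD_HOC
    if "integrated_controls" not in observed:
        return Stage.DEFINED
    return Stage.MANAGED


# Example: reviews are standardized, but controls are not yet integrated.
current = map_to_stage({"standard_reviews"})
print(current.name)  # DEFINED
```

Requiring the earlier practices first keeps the result conservative, which fits the goal of alignment rather than label-chasing.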

Using maturity models as a continuous improvement tool

Maturity models guide improvement over time.

They are most effective when revisited regularly.

- Identify one-stage improvement targets

- Prioritize governance gaps by risk impact

- Track progress across quarters, not weeks

Core Stages in an AI Governance Maturity Model

Most models define clear stages that reflect governance sophistication.

The names vary, but the progression is consistent.

Ad hoc or initial governance stage

Governance is informal and reactive at this stage.

Decisions depend on individuals, not systems.

- No centralized oversight

- Limited documentation

- Risks addressed after issues appear

Defined and structured governance stage

Governance processes become repeatable and documented.

Roles and approvals start to stabilize.

- Formal policies exist

- Review processes are defined

- Accountability is clearer

Managed and optimized governance stage

Governance is integrated into operations and strategy.

Controls are proactive and measurable.

- Continuous monitoring

- Risk metrics tied to outcomes

- Governance supports scale rather than blocking it

Key Governance Domains Evaluated in Maturity Models

Maturity models assess governance across multiple domains.

Strong performance in one area does not offset weakness in others.
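One way to make the "no offsetting" idea concrete is to treat the overall stage as the lowest stage across domains instead of an average. The sketch below assumes a hypothetical 1 to 3 stage scale and illustrative domain names; real models may aggregate differently.

```python
from __future__ import annotations


def overall_stage(domain_stages: dict[str, int]) -> int:
    """Overall maturity is capped by the weakest domain (assumed rule)."""
    if not domain_stages:
        raise ValueError("at least one domain is required")
    return min(domain_stages.values())


def weakest_domains(domain_stages: dict[str, int]) -> list[str]:
    """Names of the domains that hold the overall stage down."""
    floor = overall_stage(domain_stages)
    return sorted(name for name, stage in domain_stages.items() if stage == floor)


# Hypothetical assessment: strong policy, weak monitoring.
stages = {
    "policy_oversight": 3,
    "risk_management": 2,
    "monitoring_transparency": 1,
}
print(overall_stage(stages))    # 1
print(weakest_domains(stages))  # ['monitoring_transparency']
```

An average would report this organization at stage 2 and hide the monitoring gap; taking the minimum keeps the gap visible.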

Policy, oversight, and accountability structures

Clear ownership is a core maturity signal.

Governance fails when responsibility is unclear.

- Defined decision-makers

- Escalation paths

- Board or executive visibility

Risk management and impact assessment

Risk processes mature as AI impact increases.

This includes both operational and societal risks.

- Use-case risk classification

- Pre-deployment assessments

- Ongoing risk reviews

Monitoring, transparency, and explainability

Advanced maturity requires visibility into AI behavior.

Monitoring moves beyond model performance alone.

- Logging and audit trails

- User transparency practices

- Explainability aligned to risk level

Roles and Responsibilities Across Governance Maturity Levels

Roles evolve as governance becomes more structured.

Early stages rely on individuals; mature stages rely on systems.

Executive and board-level accountability

Leadership accountability increases with maturity.

Executives set risk tolerance and governance direction.

- Policy approval

- Oversight reporting

- Strategic alignment

Risk, compliance, and legal functions

These teams formalize governance as maturity grows.

They translate expectations into controls.

- Regulatory interpretation

- Risk frameworks

- Control testing

Product, data, and AI development teams

Operational teams implement governance daily.

Their involvement determines real effectiveness.

- Design-time controls

- Documentation ownership

- Feedback into governance updates

Why AI Governance Maturity Matters for Organizations

Governance maturity directly affects risk, trust, and scalability.

Weak governance becomes visible as AI use expands.

Managing regulatory and compliance risk

Mature governance reduces regulatory surprises.

It supports readiness rather than last-minute fixes.

- Clear evidence trails

- Consistent processes

- Faster regulatory responses

Reducing ethical and reputational exposure

Strong governance lowers the chance of public failures.

Issues are caught earlier and handled consistently.

- Bias detection

- Responsible use checks

- Clear accountability

Supporting scalable and responsible AI adoption

Maturity enables growth without chaos.

Governance becomes an enabler, not a blocker.

- Faster approvals

- Predictable reviews

- Confidence to scale AI use

Benefits of Using an AI Governance Maturity Model

The model provides value across functions.

Each group gains clarity and direction.

Benefits for compliance and risk leaders

Risk teams gain structure and prioritization.

They move from reactive to planned governance.

- Clear benchmarks

- Risk-based focus

- Audit readiness

Benefits for business and product owners

Product teams gain predictability.

They know what is required before deployment.

- Fewer late-stage blockers

- Clear expectations

- Faster decision cycles

Benefits for technical and data teams

Technical teams gain clarity and consistency.

Governance becomes part of normal workflows.

- Defined documentation standards

- Stable review criteria

- Reduced rework

Best Practices for Applying an AI Governance Maturity Model

Effective use requires discipline and realism.

The model should guide action, not just reporting.

Aligning governance goals with business strategy

Governance targets should match AI ambition.

Over-engineering early stages slows adoption.

- Match controls to risk

- Focus on high-impact use cases

- Scale governance gradually

Involving cross-functional stakeholders early

Governance works best when shared.

Early involvement reduces friction later.

- Legal, risk, and product alignment

- Shared definitions

- Agreed escalation paths

Reviewing and updating maturity assessments regularly

Governance maturity changes as AI use changes.

Regular reviews keep models relevant.

- Annual or semi-annual reviews

- Triggered reassessments after major changes

- Continuous feedback loops

Compliance and Regulatory Considerations in Governance Maturity

Maturity models support compliance when aligned properly.

They are not a replacement for regulatory requirements.

How maturity models support regulatory readiness

Mature governance creates evidence by design.

This reduces compliance effort over time.

- Documented processes

- Consistent approvals

- Traceable decisions

Aligning governance stages with emerging AI regulations

Regulations increasingly expect structured governance.

Maturity stages help map readiness.

- Risk-based controls

- Accountability clarity

- Ongoing monitoring

Documentation and audit readiness expectations

Documentation depth increases with maturity.

Not all use cases require the same level of documentation.

- Proportional documentation

- Clear version control

- Accessible audit trails

Common Mistakes and Risks When Using Maturity Models

Misuse of maturity models can create false confidence.

Awareness of common pitfalls is essential.

Treating maturity models as one-time assessments

Governance maturity is not static.

One-time scoring quickly becomes outdated.

- AI use evolves

- Risks change

- Controls drift

Over-focusing on policy without operational follow-through

Policies alone do not create governance.

Execution matters more than documentation.

- Unused policies

- Untrained teams

- Weak enforcement

Ignoring cultural and organizational readiness

Governance fails without cultural support.

People must understand and trust the process.

- Resistance to oversight

- Shadow AI use

- Inconsistent adoption

Tools and Frameworks That Support AI Governance Maturity

Tools can help, but only once governance processes are mature enough to support them.

Technology does not replace governance thinking.

Internal assessment frameworks and scorecards

Scorecards help standardize evaluation.

They support consistency across teams.

- Defined criteria

- Repeatable scoring

- Trend tracking
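As a rough illustration of repeatable scoring and trend tracking, a scorecard can be stored as dated assessments and compared over time. The criterion names and the 1 to 3 scale below are assumptions made for the example, not a prescribed scheme.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Assessment:
    assessed_on: date
    scores: dict[str, int]  # criterion name -> score on an assumed 1-3 scale


def trend(history: list[Assessment]) -> dict[str, int]:
    """Score change per criterion between the earliest and latest assessment.

    Only criteria present in both assessments are compared, so the
    criteria list can grow without breaking older records.
    """
    if len(history) < 2:
        return {}
    ordered = sorted(history, key=lambda a: a.assessed_on)
    first, last = ordered[0], ordered[-1]
    return {
        name: last.scores[name] - first.scores[name]
        for name in first.scores
        if name in last.scores
    }


history = [
    Assessment(date(2024, 1, 15), {"ownership": 1, "risk_review": 2}),
    Assessment(date(2024, 7, 15), {"ownership": 2, "risk_review": 2}),
]
print(trend(history))  # {'ownership': 1, 'risk_review': 0}
```

Keeping the same criteria between assessments is what makes the scores comparable; a positive number means the criterion improved and zero means no change.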

Governance workflows and documentation systems

Workflow tools support scale.

They reduce manual coordination.

- Approval routing

- Evidence storage

- Status visibility

When tooling helps and when it adds complexity

Tooling helps mature organizations more than early ones.

Premature tooling creates friction.

- Use tools after processes stabilize

- Avoid over-automation early

- Focus on usability

Practical Checklist for Advancing AI Governance Maturity

A simple checklist helps translate models into action.

It supports focused improvement.

Questions to assess your current governance stage

Start with factual questions.

Avoid assumptions.

- Who approves AI use cases?

- How are risks documented?

- What evidence exists today?

Indicators that signal readiness to move up a level

Progress shows through behavior changes, not through policy volume.

- Consistent reviews

- Clear ownership

- Fewer escalations

Warning signs of governance gaps

Certain signals indicate maturity issues.

They should trigger review.

- Conflicting decisions

- Missing documentation

- Unclear accountability

Comparing AI Governance Maturity Models to Alternative Approaches

Maturity models are one governance approach.

They are strongest when combined thoughtfully.

Maturity models vs. principle-based governance

Principles guide intent; maturity models guide execution.

Both are needed.

- Principles set direction

- Models operationalize behavior

- Together they align values and actions

Maturity models vs. risk-only frameworks

Risk frameworks focus on exposure, not capability growth.

Maturity models address progression.

- Risk = what could go wrong

- Maturity = how governance improves

- Both inform decisions

When a hybrid approach makes sense

Hybrid models balance flexibility and structure.

They work well in complex organizations.

- Principles for guidance

- Maturity stages for planning

- Risk tools for prioritization